Compound Leadership: Managing AI Agents Without Losing Control

The Leadership Framework for Managing AI Agents Without Losing Oversight or Control

You probably have two voices in your head right now.

“AI agents will make everything faster.”

“But what if they break something… or worse, I lose control?”

Both are right.

Agents multiply both capability and vulnerability. The leaders who thrive in this new era won’t just practice compound engineering, a concept I first heard from Kieran Klaassen of Cora to describe how teams can codify their workflows so AI systems can replicate and improve them.

They’ll evolve that thinking into something broader, compound leadership: the disciplined practice of scaling human judgment through secure, transparent collaboration between people and AI systems. It builds on the principles of compound engineering but extends them to include governance, accountability, and organizational learning.

In short, compound leadership is how you move fast without losing control.

From Compound Engineering to Compound Leadership

Compound engineering scales execution.

Compound leadership scales judgment.

It means you:

Understand your baseline—what your team, data, and systems can safely support.

Build environments that control access, log every action, and maintain human oversight.

Use agents as explorers—running multiple paths, learning from mistakes, and codifying lessons.

Agents aren’t employees, but leadership principles still apply—know what they’re good at, where they falter, and how to keep their output aligned with your goals.

Sometimes, the best use for AI in your environment isn’t the common use; it’s the one that fits your risk profile and mission. Thoughtful deployment beats flashy automation every time.

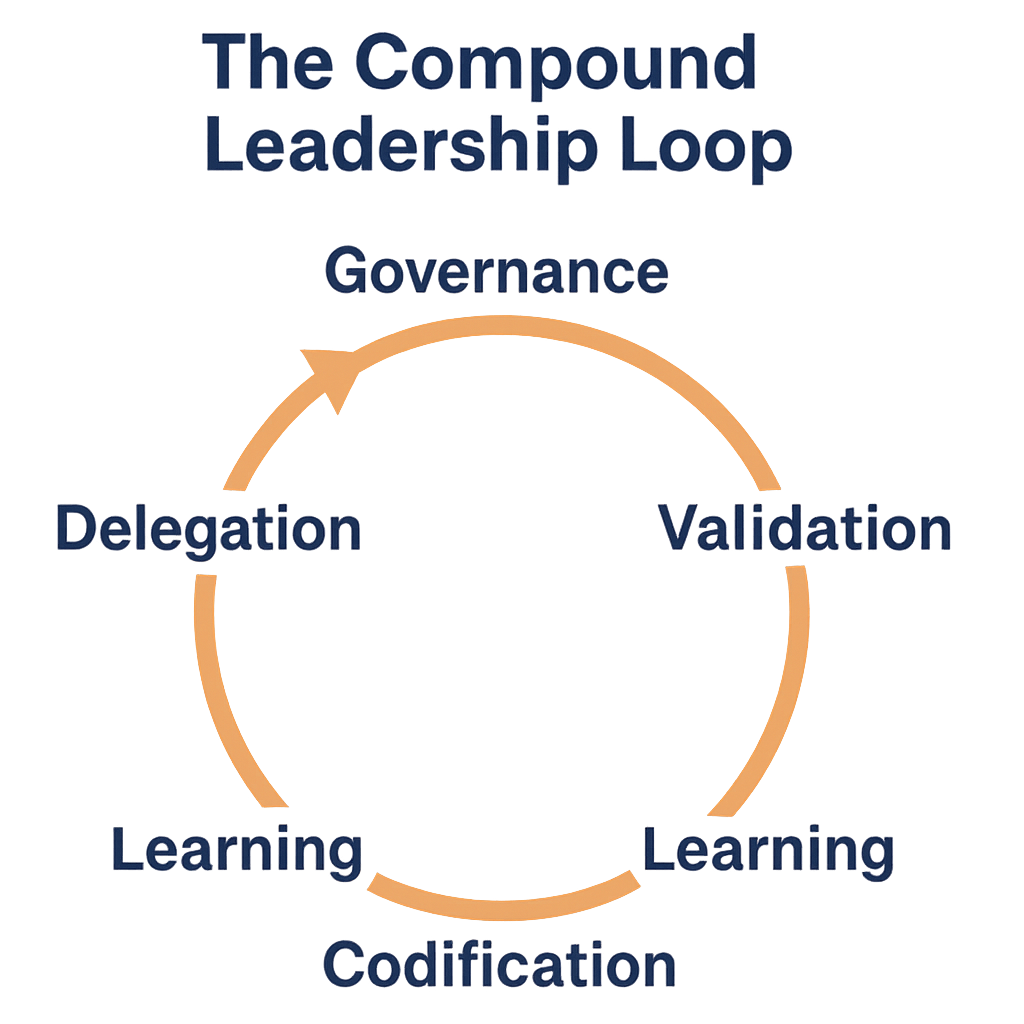

The Compound Leadership Loop

At the core of this new mindset is the Compound Leadership Loop—a continuous cycle of:

Governance → Delegation → Validation → Learning → Codification → Governance.

Each stage strengthens the next:

Governance defines values, rules, and security boundaries.

Delegation activates people and agents within those guardrails.

Validation keeps humans accountable for outcomes.

Learning captures insights and errors.

Codification embeds those lessons into future cycles.

Every pass through the loop compounds trust, precision, and performance.

Build the Right Environment Before You Scale

Before launching agents, build a secure foundation. Ask yourself:

Can I tell what the agent did, when it did it, and why?

If the answer is no, stop. You’re not ready.

Without governance and explainability, speed becomes fragility disguised as progress.
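One way to make that readiness question concrete is to treat "what, when, and why" as required fields on every logged action. The sketch below is illustrative only: `AuditRecord`, `is_explainable`, and `ready_to_scale` are hypothetical names, and a real system would write to durable, tamper-evident storage rather than an in-memory list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    """One attributable action: who did what, when, and why."""
    actor: str       # hypothetical agent or human identifier
    action: str      # what was done
    rationale: str   # why the actor says it acted
    timestamp: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def is_explainable(record: AuditRecord) -> bool:
    """True only if the record names an actor, an action, and a reason."""
    return all(
        value.strip()
        for value in (record.actor, record.action, record.rationale)
    )


def ready_to_scale(log: list[AuditRecord]) -> bool:
    """Readiness check: a log exists and every entry is explainable."""
    return bool(log) and all(is_explainable(r) for r in log)
```

If `ready_to_scale` returns `False` for your current logs, that is the signal to stop and fix visibility before adding more agents.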

Security Is the Foundation, Not the Afterthought

Security for agentic systems isn’t a layer; it’s the architecture.

Identity & Access Management for Agents

Give each agent unique credentials scoped to specific tasks. No shared logins. Every action must be attributable.

Auditability by Design

Log everything: prompts, responses, and actions. As far as possible, you need to be able to tell human, agent, and malicious activity apart.

Explainability & Transparency

An agent’s reasoning path must be visible. Opaque behavior isn’t innovation; it’s risk.

Continuous Validation

Let agents run in short cycles (20–30 minutes). Review for accuracy, ethics, and security before green-lighting the next step. Use specialized agents rather than generalist ones. When each agent has a defined, narrow scope, you reduce both the risk of deviation (straying from intent) and the degree of deviation (the impact when it happens).

This modular approach mirrors sound engineering practice: isolate functions, validate outputs, and contain errors before they cascade. Together with environment isolation and human oversight, continuous validation is the feedback loop that keeps speed aligned with control.
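As a rough sketch of the short-cycle idea, the function below runs agent steps in small batches and refuses to start the next batch until a reviewer approves the last one. It uses a step count as a stand-in for the 20–30 minute window, and `run_in_cycles`, `review`, and `steps_per_cycle` are hypothetical names chosen for illustration.

```python
from typing import Callable, Iterable


def run_in_cycles(
    steps: Iterable[Callable[[], str]],
    review: Callable[[list[str]], bool],
    steps_per_cycle: int = 3,
) -> list[str]:
    """Run steps in small batches; stop at the first batch a reviewer rejects."""
    approved: list[str] = []
    batch: list[str] = []
    for step in steps:
        batch.append(step())
        if len(batch) == steps_per_cycle:
            if not review(batch):
                # Halt here: rejected work is never promoted,
                # and no later step gets to run.
                return approved
            approved.extend(batch)
            batch = []
    # Review any partial batch left over at the end.
    if batch and review(batch):
        approved.extend(batch)
    return approved
```

The `review` callable can be a person clicking approve, a checklist, or a separate review agent; the point is that the next cycle cannot begin without it.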

Environment Isolation

Run agents in sandboxed environments until they’re proven safe, then segment them once deployed so each operates within a clearly defined boundary. Keep production data and privileges tightly scoped, and design your architecture so you can quarantine or shut down a single agent without disrupting the entire system.

Segmentation here is architectural: it’s how you contain operational impact. Later, insider-risk discipline handles the human and behavioral side of containment.
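To illustrate what "quarantine a single agent without disrupting the entire system" can look like, here is a minimal in-memory sketch. `Fleet` and `AgentSegment` are hypothetical names; in practice the boundary would be enforced by your infrastructure (network policy, IAM, containers), not by application code alone.

```python
from dataclasses import dataclass, field


@dataclass
class AgentSegment:
    """One agent and the only resources it may touch."""
    name: str
    allowed_resources: frozenset[str]
    quarantined: bool = False


@dataclass
class Fleet:
    segments: dict[str, AgentSegment] = field(default_factory=dict)

    def register(self, name: str, resources: set[str]) -> None:
        self.segments[name] = AgentSegment(name, frozenset(resources))

    def quarantine(self, name: str) -> None:
        """Cut off one agent; every other segment is left untouched."""
        self.segments[name].quarantined = True

    def can_access(self, name: str, resource: str) -> bool:
        seg = self.segments.get(name)
        return (
            seg is not None
            and not seg.quarantined
            and resource in seg.allowed_resources
        )
```

Because each agent has its own segment, pulling one out of service is a single flag flip rather than a system-wide shutdown.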

Human-in-the-Loop Approval Gates

Every high-impact action, whether a deployment, a data write, or a user-facing change, should pass a human review.

This is how you stay fast and deliberate.
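An approval gate can be as simple as a wrapper that refuses to run certain kinds of action without a human sign-off. The sketch below assumes three action categories taken from the examples above; `HIGH_IMPACT`, `execute`, and `ApprovalRequired` are hypothetical names, and the `approver` callback stands in for whatever review step your team actually uses.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

# Assumed categories, mirroring the examples in the text.
HIGH_IMPACT = {"deploy", "data_write", "user_facing_change"}


class ApprovalRequired(Exception):
    """Raised when a high-impact action lacks human approval."""


def execute(
    kind: str,
    action: Callable[[], T],
    approver: Optional[Callable[[str], bool]] = None,
) -> T:
    """Run low-impact actions directly; gate high-impact ones on a human."""
    if kind in HIGH_IMPACT:
        if approver is None or not approver(kind):
            raise ApprovalRequired(f"'{kind}' needs human sign-off")
    return action()
```

Low-impact work flows through untouched, which is what keeps the gate from becoming a bottleneck.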

Insider-Risk Discipline, Reimagined for Agents

The same principles used to mitigate insider threats apply here. Treat agents like powerful contractors: trusted, but verified.

Least privilege: Each agent gets only what it needs. Rotate and expire keys frequently.

Segmentation: Isolate agents by task or function to prevent lateral movement.

Behavioral baselining: Define normal patterns, and investigate drift early.

Dual control: Require human approval for sensitive or destructive actions.

Culture of reporting: Encourage your team to flag anomalies just as they would insider risk.

These aren’t new controls—they’re proven governance, adapted for a new kind of digital teammate.
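Least privilege and key expiry are easy to state and easy to skip, so it can help to see how little code the core idea needs. This is a sketch under stated assumptions: `AgentCredential`, `issue`, and `authorize` are hypothetical, the scope strings are made up, and a production setup would lean on your identity provider or secrets manager instead of hand-rolled tokens.

```python
import secrets
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class AgentCredential:
    agent_id: str            # unique per agent, never shared
    scopes: frozenset[str]   # only what the task needs
    expires_at: datetime     # short-lived by default
    token: str


def issue(
    agent_id: str,
    scopes: set[str],
    ttl: timedelta = timedelta(hours=1),
    now: Optional[datetime] = None,
) -> AgentCredential:
    """Mint a fresh, narrowly scoped credential that expires after ttl."""
    now = now or datetime.now(timezone.utc)
    return AgentCredential(
        agent_id, frozenset(scopes), now + ttl, secrets.token_urlsafe(32)
    )


def authorize(
    cred: AgentCredential, scope: str, now: Optional[datetime] = None
) -> bool:
    """Allow an action only if the credential is unexpired and in scope."""
    now = now or datetime.now(timezone.utc)
    return now < cred.expires_at and scope in cred.scopes
```

Rotation then becomes routine: when a credential expires, call `issue` again rather than extending the old one.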

A Secure-by-Design Workflow

Adapted from Cora’s compound engineering approach, hardened for security and scale:

Define the objective. Write a clear task description and expected outcome.

Threat-model the task. Ask what could go wrong if the agent fails or is exploited.

Assign limited-scope agents. Use separate agents for planning, execution, and review, each with minimal privileges.

Validate the result. Check correctness, bias, and data safety before promotion.

Codify feedback. Capture your corrections so the next cycle starts smarter.

Log and monitor everything. Treat auditability as the system’s nervous system.

Speed and accountability can coexist—if you design for both.
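The workflow steps above can be sketched as a single function that wires them together. Everything here is illustrative: `TaskRun` and `run_task` are hypothetical names, the `plan`, `execute`, and `validate` callables stand in for separate limited-scope agents or human reviewers, and the audit trail is a plain list rather than a real logging system.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class TaskRun:
    objective: str                                    # define the objective
    threats: list[str]                                # threat-model the task
    lessons: list[str] = field(default_factory=list)  # codified feedback
    audit: list[str] = field(default_factory=list)    # log of every stage


def run_task(
    run: TaskRun,
    plan: Callable[[str], list[str]],
    execute: Callable[[list[str]], str],
    validate: Callable[[str], tuple[bool, str]],
) -> Optional[str]:
    """Plan, execute, and validate with separate roles; promote only if valid."""
    if not run.threats:
        raise ValueError("threat-model the task before assigning agents")
    steps = plan(run.objective)
    run.audit.append(f"plan: {steps}")
    result = execute(steps)
    run.audit.append(f"execute: {result}")
    ok, feedback = validate(result)
    run.audit.append(f"validate: ok={ok}")
    if feedback:
        run.lessons.append(feedback)  # the next cycle starts smarter
    return result if ok else None
```

Keeping planner, executor, and reviewer as separate callables makes it natural to give each one its own credentials and its own narrow scope.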

Learning Loops and Exploratory Leadership

Compound leadership uses AI not to replace human thought but to amplify it. Agents can explore multiple hypotheses in parallel, surfacing patterns leaders might miss. Every run, success or failure, feeds the next, transforming experience into an evolving body of institutional knowledge.

The goal isn’t just to collect insights; it’s to make human judgment scalable—so that every cycle of governance, delegation, validation, and learning compounds organizational intelligence.

Stay in the Loop Without Losing Speed

The goal isn’t full automation. It’s graduated autonomy: delegation without detachment.

Start small: let agents run limited cycles, review outputs, and expand autonomy as trust builds. Maintain visibility through dashboards and alerting, but don’t micromanage.

You don’t have to read every line of code or prompt, just ensure oversight loops remain intact and explainability stays high.

You can’t govern what you don’t understand. Staying in the loop means preserving comprehension, not just control.
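Graduated autonomy can be expressed as a simple policy: widen the agent's leash after a run of approved reviews, and pull it back to the start after a rejection. The numbers and names below (`AutonomyPolicy`, `promote_after`, doubling the level) are assumptions for illustration, not a recommended calibration.

```python
from dataclasses import dataclass


@dataclass
class AutonomyPolicy:
    level: int = 1          # hypothetical unit: steps allowed between reviews
    max_level: int = 8
    streak: int = 0         # consecutive approved reviews
    promote_after: int = 3  # approvals needed before expanding autonomy

    def record_review(self, approved: bool) -> int:
        """Update autonomy after a human review and return the new level."""
        if approved:
            self.streak += 1
            if self.streak >= self.promote_after and self.level < self.max_level:
                self.level = min(self.level * 2, self.max_level)
                self.streak = 0
        else:
            # Trust is rebuilt from the start after a rejected review.
            self.level = 1
            self.streak = 0
        return self.level
```

The asymmetry is deliberate: autonomy expands slowly and contracts immediately, which keeps the human review loop meaningful as the agent earns more room.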

The Future of Compound Leadership

Managing agents is leadership, not logistics. It demands clarity, foresight, and discipline.

Compound leadership means:

Scaling judgment, not just execution.

Building environments where speed and safety reinforce each other.

Treating AI agents like collaborators, learning from them while keeping them accountable.

Designing security-first systems where explainability, validation, and traceability are nonnegotiable.

In the end, the technology is not the differentiator; leadership is.

Compound leadership isn’t just how you manage AI; it’s how you evolve your organization’s intelligence. Each decision compounds the next, turning governance into momentum and foresight into advantage.

The future belongs to leaders who can move quickly and wisely—managing humans and machines as one compound team, anchored in security, transparency, and trust.

That’s compound leadership.

And it’s how you scale without surrendering control.

For a quick-reference version of these ideas, download the Compound Leadership Cheat Sheet—a practical guide to applying this framework across teams, technologies, and decisions.

Concept of “Compound Engineering” originally introduced by Kieran Klaassen of Cora in the This New Way podcast (Oct 9, 2025).

This article first appeared on Command Line with Camille. Follow on Substack for more insights.